Usability Testing

Usability testing is a common UX activity conducted during design. In a usability test, participants perform test tasks while their performance is measured against several metrics, such as completion times, successes, and errors. When tests are done correctly, the results provide ballpark predictions about the user population. When done incorrectly, their predictive power is no better than random chance.

As of 2020, usability testing has become a common activity within many organizations. Organizations now budget the resources necessary to conduct tests, and provide access to end users. Prior to 2020, however, organizations were not so committed to these activities, and often discouraged them. Few organizations provided budgets or access to end users, and internal politics often discouraged feedback from external sources. Testing occurred, but it was infrequent, or conducted only at senior management's request.

Much of my career occurred during this era, and I only have a few work examples for review. I’ve included examples from my Master’s Thesis to demonstrate the academic training I received in Research Design. Training in Research Design is important to ensure that test activities are conducted in a valid manner which yields useful results.

Academic Examples

Cellular Telephone Dialing Performance

This series of studies investigated how several cellular telephone design attributes affected dialing performance among members of the U.S. population, including those with disabilities. This was a mixed factors study with the following independent variables:

Within Subject Factors

- Key Stroke (3 levels)

- Key Height (3 levels)

- Key Separation (3 levels)

Between Subject Factors

- Disability (5 levels)

Fifteen participants completed 256 trials each, representing a mix of different dialing tasks. Tasks were completed using four telephone models, presented in a partially counterbalanced order.
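For readers unfamiliar with partial counterbalancing, here is a minimal sketch of one common way to do it: a cyclic Latin square, in which each telephone model appears once in every serial position. This illustrates the general technique and is not the exact scheme used in the thesis. The model labels, participant IDs, and function names are hypothetical.

```python
def cyclic_latin_square(items):
    """Build len(items) presentation orders by rotating the list.

    Each item appears exactly once in every serial position, which
    balances position effects without using all possible orders.
    """
    n = len(items)
    return [[items[(row + col) % n] for col in range(n)] for row in range(n)]


def assign_orders(participant_ids, items):
    """Cycle participants through the rows of the Latin square."""
    square = cyclic_latin_square(items)
    return {pid: square[i % len(square)] for i, pid in enumerate(participant_ids)}


# Hypothetical labels; the source does not name the four models.
models = ["Model A", "Model B", "Model C", "Model D"]
participants = [f"P{n:02d}" for n in range(1, 16)]  # fifteen participants

orders = assign_orders(participants, models)
for pid in participants[:4]:
    print(pid, orders[pid])
```

With fifteen participants and four orders, three orders are used four times and one is used three times, so the balance is approximate rather than exact.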

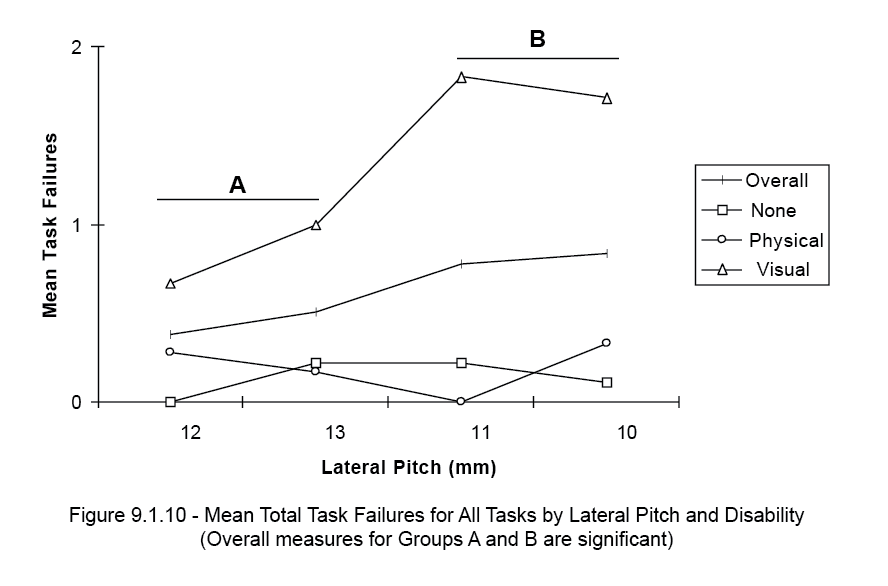

Data were analyzed using a series of one-way ANOVAs. Significant effects were found for various design attribute by disability level combinations.
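As a reminder of what a one-way ANOVA computes, the sketch below derives the F statistic from the between-group and within-group sums of squares. The scores are invented for illustration and are not data from this study. The function name is hypothetical.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of score lists."""
    all_scores = [x for g in groups for x in g]
    grand_mean = sum(all_scores) / len(all_scores)
    group_means = [sum(g) / len(g) for g in groups]

    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Variation of individual scores around their own group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )

    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Made-up scores for three levels of a single factor (illustration only).
toy_groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat, df_b, df_w = one_way_anova_f(toy_groups)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

Turning F into a p-value requires the F distribution. In practice a statistics package such as SciPy (`scipy.stats.f_oneway`) handles both steps.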

Cellular Telephone Dialing Preferences

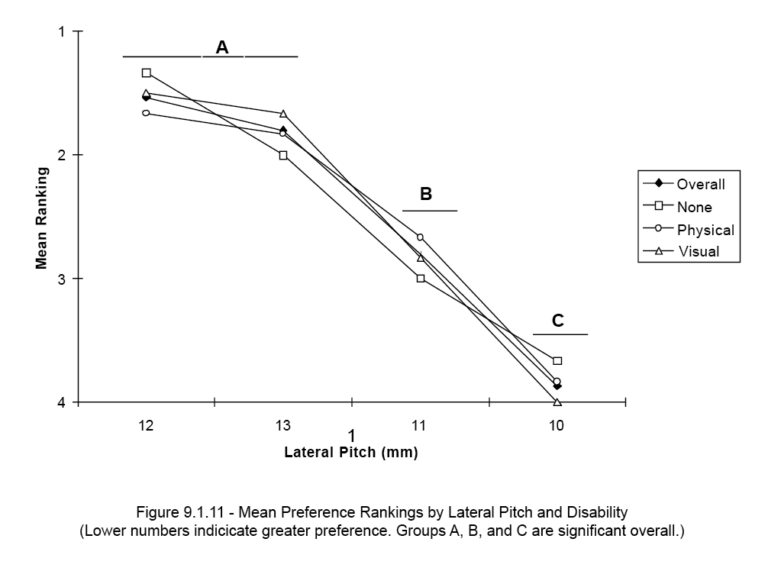

This study investigated preferences related to several cellular telephone design attributes amongst a population of U.S. consumers, especially those with physical and visual disabilities. This test was a mixed factors design, with the following independent variables:

Within Subject Factors

- Key Stroke (3 levels)

- Key Height (3 levels)

- Key Separation (3 levels)

- Power Key Position (8 levels)

- Send/End Key Locations (8 levels)

Between Subject Factors

- Disability (5 levels)

Fifteen participants completed the study. Participants were asked to complete a number of common dialing tasks during test trials. These tasks included 7-digit, 11-digit, 911, and stored memory recall dialing.

Nine telephone models were presented in a partially counterbalanced order. Participants were asked to provide rank orders and preference data using paper surveys.

The results differed by attribute and disability, with significant effects. Details for each attribute are included in separate files within this portfolio.

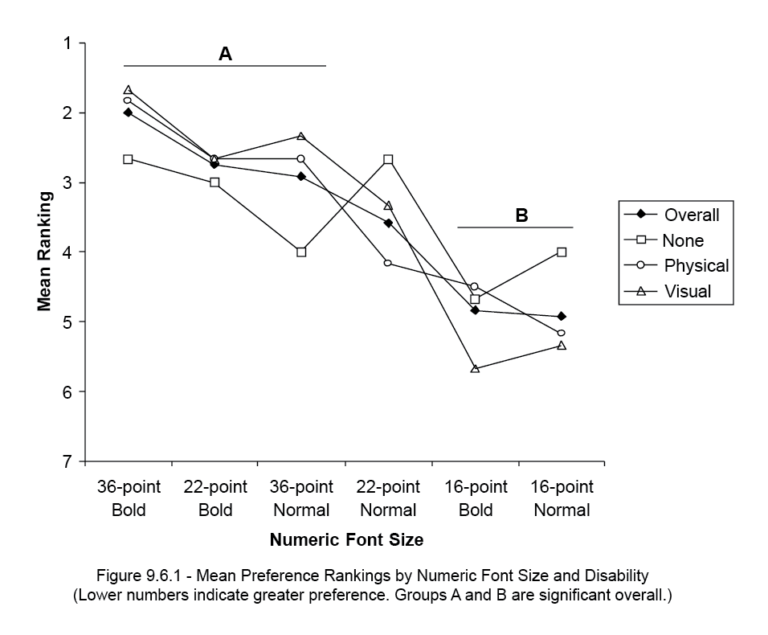

Cellular Telephone Display Attribute Preferences

This study investigated preferences related to several cellular telephone design attributes amongst a population of U.S. consumers, especially those with physical and visual disabilities. This test was a mixed factors design, with the following independent variables:

Within Subject Factors

- Numeric Font Size (6 levels)

- Alphabetic Font Size (3 levels)

Between Subject Factors

- Disability (5 levels)

Fifteen participants completed the study. Participants were asked to complete a number of common dialing tasks during test trials. These tasks included 7-digit, 11-digit, 911, and stored memory recall dialing.

Nine telephone models were presented in a partially counterbalanced order. Participants were asked to provide rank orders and preference data using paper surveys.

The results differed by attribute and disability, with significant effects. Details for each attribute are included in separate files within this portfolio.

Notebook Computer Preference Studies

This study investigated the usability and accessibility of two popular notebook computer models amongst a population of U.S. consumers, including those with physical and visual disabilities. Participants used desktop computers exclusively, and had no familiarity with notebook computers prior to the study.

Within Subject Factors

- Computer Model (2 levels)

Between Subject Factors

- Disability (4 levels)

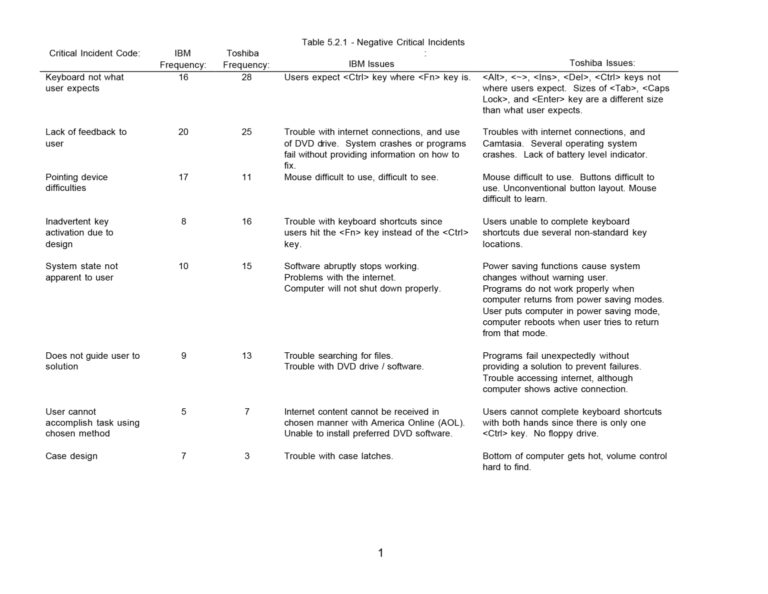

Eight participants used each computer for a 30-day loan period. After completing both loan periods, participants were asked to rate their preferences for different design attributes using various rating instruments, including the Questionnaire for User Interface Satisfaction (QUIS), a standardized usability questionnaire.

During each loan period, participants recorded “critical incidents” as they occurred, trying to recreate them for analysis. Surveys evaluating computer features were completed when the computer was returned. A new model was assigned, and the participants repeated the process. At the conclusion of both loan periods participants completed a series of surveys on model preference.

Data were analyzed using a series of statistical techniques. Results were presented as a series of design and accessibility recommendations.

Work Examples

Salvaging a Usability Test

When organizations conduct usability tests correctly, the value of the results exceeds expenditures. Business questions are answered with valid data, and the business receives accurate direction.

Other times, however, testing activities are conducted with no business questions to answer. Activities occur just for the sake of conducting the activities. That’s what happened in this example.

To make a long story short, another individual conducted a usability test. I was asked to supply materials for them to use to test the product I supported. However, the other individual, who designed and facilitated the test, had no background in Research Design. The test procedure and results lacked sufficient validity for informing business decisions beyond random chance. Regardless, I was asked to salvage what I could from this data and produce recommendations for the product I supported.

The results report is organized by task number and coded by problem. Participant think-aloud statements were gathered for each task and organized into critical incident categories. Each category has a count of participant statements within that category.
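The tallying step described above can be sketched in a few lines. This is a minimal illustration of counting coded think-aloud statements per task and category. The task numbers, category names, and function name are hypothetical and do not come from the actual report.

```python
from collections import Counter


def count_incidents(coded_statements):
    """Tally (task_number, category) pairs into per-task category counts."""
    counts = {}
    for task, category in coded_statements:
        counts.setdefault(task, Counter())[category] += 1
    return counts


# Hypothetical coded statements: (task number, critical incident category).
coded = [
    (1, "navigation"),
    (1, "terminology"),
    (1, "navigation"),
    (2, "feedback"),
    (2, "navigation"),
]

for task, tally in sorted(count_incidents(coded).items()):
    print(f"Task {task}: {dict(tally)}")
```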

The recommendations example is based on one particular application area. Improved test design would not have changed the outcome of these recommendations.

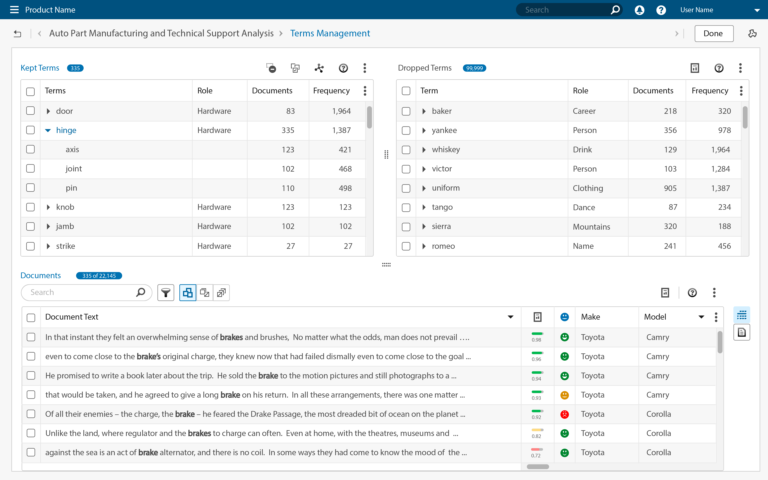

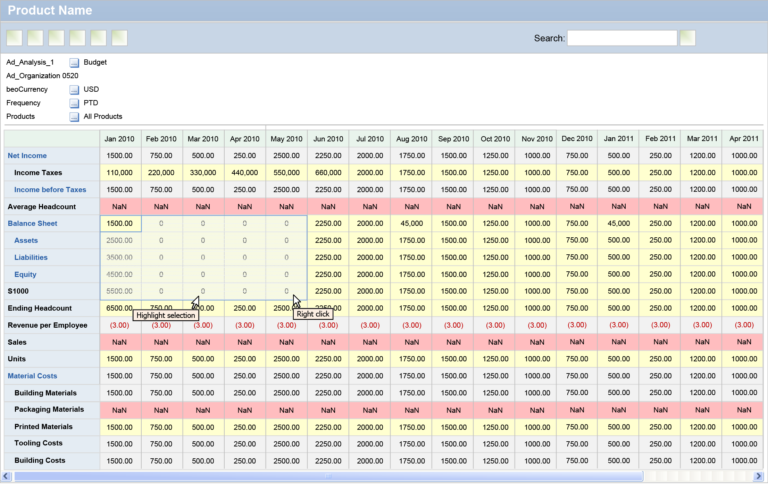

Form Data Entry

This is a finance application used by large organizations to collect and report on accounting-related data. Users at all levels of the organization enter data using either a thick-client interface or a web-based extension. The system centralizes and aggregates the data, making it available for planning, budgeting, and reporting activities.

This usability test evaluated a series of tasks for the thick-client and web-based data entry interfaces for this application. Corporate-level employees enter data using the thick-client interface, as the application is installed locally for these users. Users at lower levels of the organization enter data through a web-based extension.

Six Business Analysts were recruited from the local area to participate. Participants performed a series of familiarization tasks using Excel before proceeding with test tasks. Participants provided “think-aloud” statements while completing tasks. The data was collected, analyzed, and reported on in the attached documents.

Participant sessions were transcribed and analyzed to obtain critical incident reports. Participants had numerous complaints and they struggled with the tasks. Task performance metrics (completion times, errors, etc.) were initially recorded, then abandoned as they added little beyond the critical incident reports.

The attached recommendations and presentation were provided to the Development team for review. A prioritized recommendation spreadsheet was also provided, but that example is not available.

3rd Generation Mail Delivery Vehicle

In the early 2000s, the U.S. Postal Service Vehicle Unit investigated options for reducing fleet operation costs. One option was to replace aged delivery vehicles with new vehicles. New vehicles could provide many cost-saving benefits related to fuel economy, operations, repairs, accidents, and carrier injuries.

After a lengthy process, the USPS awarded a contract to a major vehicle supplier to produce designs for an updated vehicle. The updated vehicle would be substantially different from current vehicles, and include several updates to address areas of concern. A static prototype was delivered to the USPS Vehicle Unit for study and evaluation.

As one of the Human Factors Engineers supporting the project, my role was to study the usability of the prototype with a small population of mail carriers and report these findings to the USPS Vehicle unit to incorporate into future design or production contracts.

In-Vehicle Communication System

One of the unique characteristics of the U.S. Postal Service is that its delivery vehicles travel to nearly every address in the U.S. multiple times per week. While this means little to the average individual, it may mean a lot to a business seeking particular types of data. In this case, it’s cellular signal strength data in a burgeoning cellular market.

As part of a larger business deal, an unknown company and the USPS studied the feasibility of equipping USPS vehicles with data gathering equipment. In return, the USPS would receive a communication system that could offer operational improvements. This test is a usability evaluation of that proposed system.

In the proposed system, USPS delivery vehicles would be equipped with an in-vehicle communication device that reported to a system installed within Postal Delivery Units. With this system, Mail Carriers and Postal Units would have some form of communication and tracking during route delivery. This was before cellular telephones were common personal accessories.